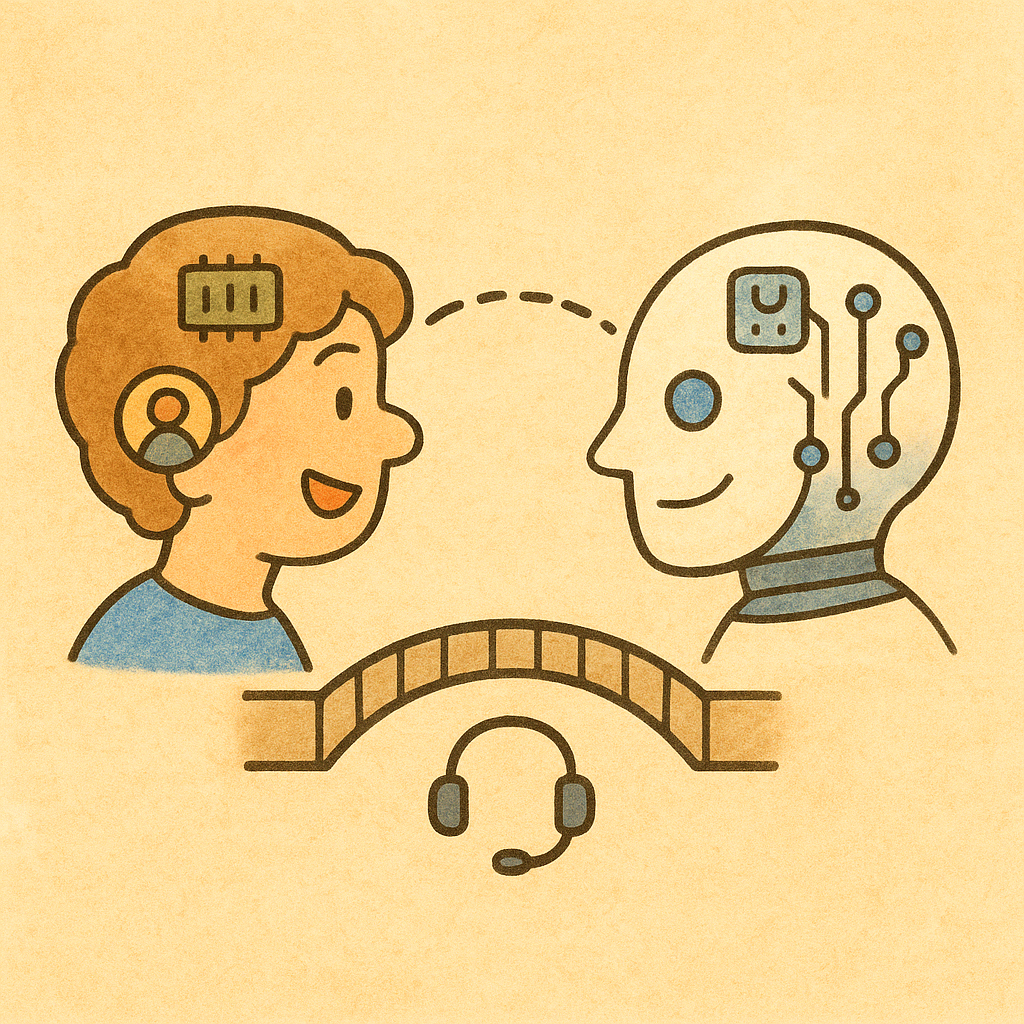

The AI Roadmap, Explained Simply

Through the Lens of Customer Support

I wanted to understand what to build as LLMs evolve—so I mapped what the human brain does and where AI is catching up. The gaps between the two reveal where entirely new products become possible.

To explain this better, I chose customer support as an example—not just because it's a widely relatable function, but because I’ve spent years building customer support products at Freshworks. I’ve learned directly from hundreds of support agents, observed how they think, adapt, and solve problems, and seen firsthand what great support looks like at scale.

This exercise was inspired in part by the book Co-Intelligence by Ethan Mollick, which explores how humans and AI can collaborate more effectively. But honestly, my best tutor for understanding the human brain was ChatGPT itself. By asking the right questions, I was able to break down complex cognitive functions and compare them with what current AI can (and can’t yet) do.

Let’s deep dive into the world of the customer support agent and AI.

1. Perception

Humans: A support agent sees your screen, hears your voice, and senses frustration instantly.

AI Today:

- Can process images (OCR), voice (speech-to-text), and text sentiment individually.

- Tools can replay sessions or co-browse for partial visibility.

Still Missing:

- These senses usually aren’t fused in support tools: AI rarely reasons across voice, visuals, and behavior together.

- It misses subtle context: hesitation in voice, cursor hover, body language (in video).

What’s Next:

- AI that synchronizes what users see, say, and do, and interprets it all together.

- For example, detecting frustration via pause and screen freeze, then offering help before being asked.
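To make "fusing the senses" concrete, here is a minimal Python sketch that combines three separate channels into a single frustration score. The signal names (`voice_pause_s`, `screen_frozen_s`, `text_sentiment`), the weights, and the 0.6 threshold are all hypothetical. A real system would learn them from labeled sessions rather than hard-code them.

```python
from dataclasses import dataclass

@dataclass
class SessionSignals:
    """Hypothetical signals, one per channel, sampled at the same moment."""
    voice_pause_s: float    # seconds of silence in the call audio
    screen_frozen_s: float  # seconds with no visible change on the user's screen
    text_sentiment: float   # -1.0 (very negative) to 1.0 (very positive)

def frustration_score(s: SessionSignals) -> float:
    """Fuse the channels into a 0..1 score using illustrative weights."""
    pause = min(s.voice_pause_s / 10.0, 1.0)
    freeze = min(s.screen_frozen_s / 30.0, 1.0)
    negativity = max(-s.text_sentiment, 0.0)
    # A pause alone or a frozen screen alone is weak evidence;
    # the product term rewards the two signals co-occurring.
    return 0.3 * pause + 0.3 * freeze + 0.2 * negativity + 0.2 * pause * freeze

def should_offer_help(s: SessionSignals, threshold: float = 0.6) -> bool:
    return frustration_score(s) >= threshold

calm = SessionSignals(voice_pause_s=8, screen_frozen_s=0, text_sentiment=0.2)
stuck = SessionSignals(voice_pause_s=8, screen_frozen_s=40, text_sentiment=-0.5)
print(should_offer_help(calm), should_offer_help(stuck))  # False True
```

The cross-term `pause * freeze` carries the idea: the same eight-second pause is ignored on its own but triggers proactive help once the screen has also stopped changing.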

2. Memory

Humans: Agents remember past tickets, tone, and preferences.

AI Today:

- Stores history in a database and retrieves it via prompts or retrieval-augmented generation (RAG).

- Fine-tuning can simulate some long-term behavior.
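The retrieval step can be sketched in a few lines of Python. This is an illustration under simplifying assumptions: it uses bag-of-words cosine similarity as a stand-in for the embedding model and vector store a production RAG pipeline would use, and the function names are hypothetical. Note that nothing is recalled unless `retrieve` is explicitly called, which is the "every recall is explicit" limitation in practice.

```python
import math
import re
from collections import Counter

def vectorize(text: str) -> Counter:
    """Bag-of-words counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, history: list[str], k: int = 2) -> list[str]:
    """Return the k past tickets most similar to the query."""
    q = vectorize(query)
    return sorted(history, key=lambda t: cosine(q, vectorize(t)), reverse=True)[:k]

def build_prompt(query: str, history: list[str]) -> str:
    """Prepend retrieved tickets as context: the 'augmented' part of RAG."""
    context = "\n".join(f"- {t}" for t in retrieve(query, history))
    return f"Relevant past tickets:\n{context}\n\nCustomer: {query}"

history = [
    "Invoice charged twice in March",
    "Password reset email never arrived",
    "Refund issued for duplicate invoice charge",
]
print(retrieve("I was charged twice on my invoice", history, k=1))
# ['Invoice charged twice in March']
```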

Still Missing:

- No evolving, automatic memory—every recall is explicit.

- Emotional memory is absent. There's no sense of trust, rapport, or learning from past mistakes.

What’s Next:

- Persistent memory per customer—AI remembers patterns, tone, and personal details.

- Self-refreshing memory: the system decides what’s important to retain and when to forget—just like humans.

3. Attention

Humans: Focus on the real issue amid scattered complaints.

AI Today:

- Token-level attention: weights each token in the prompt by its relevance to the others.

- Good at structured inputs, weak with ambiguity.
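"Token-level attention" refers to the scaled dot-product attention inside transformer models. The pure-Python sketch below computes the weights one token assigns to every token in the prompt. The 2-D vectors are made-up toy values; real models learn high-dimensional vectors and run many attention heads in parallel. It also shows the limitation: the weights only range over tokens that are in the input, so a tone shift or a behavioral cue the model never receives cannot move its focus.

```python
import math

def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query: list[float], keys: list[list[float]]) -> list[float]:
    """softmax(q . k / sqrt(d)) over all keys: how strongly the query token attends to each token."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)

tokens = ["my", "invoice", "is", "wrong"]
keys = [[0.1, 0.0], [0.9, 0.8], [0.0, 0.1], [0.5, 0.4]]  # toy key vectors
query = [1.0, 1.0]                                        # toy query vector for "wrong"
weights = attention_weights(query, keys)
for token, w in zip(tokens, weights):
    print(f"{token:>8}: {w:.2f}")
```

With these toy vectors, "invoice" receives the largest weight, which is the sense in which attention "highlights important words".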

Still Missing:

- Can’t shift focus based on tone changes or conversational pivots.

- No cross-modal prioritization (e.g., “they sound upset” overrides “they mentioned a feature”).

What’s Next:

- AI that reprioritizes in real time, focusing on emotional spikes, behavioral cues, and goal signals.

- For example, pivoting instantly from feature education to a retention save if signs of churn appear.

4. Understanding Language

Humans: Understand nuance—sarcasm, passive aggression, confusion.

AI Today:

- Strong at literal meaning, weak at cultural or emotional subtleties.

- Struggles with humor, idioms, or indirectness.

Still Missing:

- Doesn’t “get” sarcasm, vague signals (“it’s fine” when it’s not), or cross-cultural tone.

- Context-switching mid-convo is error-prone.

What’s Next:

- Deep language models that parse tone, pacing, and hidden sentiment.

- Agents that react not just to what’s said, but to how it’s said and why.

5. Generating Language

Humans: Adjust tone, personality, and empathy on the fly.

AI Today:

- Generates fluent replies, but tone often drifts or becomes robotic.

- Hard to maintain consistency across conversations.

Still Missing:

- Weak brand voice adherence. Struggles to match formal vs casual tone consistently.

- Doesn’t adapt based on user mood unless explicitly prompted.

What’s Next:

- Generation steered by brand voice, so replies stay in-brand, in-emotion, and in-context automatically.

- For example, switching from an apologetic to a cheerful tone once the issue is resolved mid-chat.

6. Reasoning

Humans: Trace issues across symptoms—like spotting a billing error linked to a backend bug.

AI Today:

- Mimics reasoning with chain-of-thought prompts.

- Often makes plausible-sounding but incorrect guesses.

Still Missing:

- No true multi-hop reasoning across logs, systems, and actions.

- Can't audit its own reasoning or explain it clearly to users.

What’s Next:

- Step-by-step problem solvers: AI that diagnoses, explains, and adapts.

- Example: “Here’s why your invoice was wrong—it stemmed from a permissions error in your last upgrade.”

7. Planning

Humans: Plan multi-step resolutions, revise steps if things change.

AI Today:

- Can execute pre-defined flows (like macros or scripted agents).

- Struggles with adapting on-the-fly.

Still Missing:

- No internal state tracking for long plans. Loses track of goals and progress.

- Rigid sequencing, brittle recovery from user deviations.

What’s Next:

- Dynamic plan builders that adapt to context, e.g., resolving a multi-user setup across multiple products.

- Goal-oriented agents that persistently work toward outcomes, not just respond to prompts.
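The "internal state tracking" gap is easier to see with an explicit data structure. The Python sketch below keeps the goal and each step's status outside the conversation itself, and recovers from a user deviation by inserting a new step instead of starting over. The `Plan` and `Step` classes and the step names are hypothetical, for illustration only.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Step:
    name: str
    done: bool = False

@dataclass
class Plan:
    """Explicit goal and progress, so neither is lost over a long conversation."""
    goal: str
    steps: list[Step] = field(default_factory=list)

    def next_step(self) -> Optional[Step]:
        return next((s for s in self.steps if not s.done), None)

    def complete(self, name: str) -> None:
        for s in self.steps:
            if s.name == name and not s.done:
                s.done = True
                return
        raise ValueError(f"no pending step named {name!r}")

    def insert_before_next(self, name: str) -> None:
        """Handle a deviation by adding a step ahead of the first pending one."""
        idx = next((i for i, s in enumerate(self.steps) if not s.done), len(self.steps))
        self.steps.insert(idx, Step(name))

    def progress(self) -> str:
        done = sum(s.done for s in self.steps)
        return f"{self.goal}: {done}/{len(self.steps)} steps done"

plan = Plan(
    "Set up team workspace",
    [Step("create accounts"), Step("assign roles"), Step("connect billing")],
)
plan.complete("create accounts")
plan.insert_before_next("configure SSO")  # the user mentions they need single sign-on
print(plan.next_step().name)  # configure SSO
print(plan.progress())        # Set up team workspace: 1/4 steps done
```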

8. Decision Making

Humans: Use experience and judgment to override rules when needed.

AI Today:

- Follows rules or policies—no flexibility without manual overrides.

- Some systems allow confidence-based escalation.

Still Missing:

- Doesn’t weigh tradeoffs or customer history.

- Lacks alignment with business values (e.g., “retention over short-term cost”).

What’s Next:

- AI that factors in policy + user value + intent—like refunding a loyal user automatically and justifying it.

- Decision engines that explain why, not just what.

9. Learning

Humans: Improve from feedback, mistakes, or new info.

AI Today:

- Learning is offline—requires retraining or fine-tuning.

- No adaptation in-session or across cases.

Still Missing:

- Doesn’t self-improve through exposure to new patterns.

- Fails to capture edge cases or correct behavior automatically.

What’s Next:

- Continuous learning agents that refine knowledge from live usage.

- For instance, flagging emerging issues from user reports and adapting guidance accordingly—without human intervention.
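Here is a minimal Python sketch of the first half of that loop: flagging a topic whose report volume today spikes well above its recent daily average. It assumes reports have already been tagged with a topic (a real system might cluster free-text tickets first), and the `ratio` and `min_count` thresholds are illustrative. Adapting guidance in response is out of scope here.

```python
from collections import Counter

def emerging_issues(history: list[list[str]], today: list[str],
                    ratio: float = 3.0, min_count: int = 5) -> list[str]:
    """Flag topics whose report count today far exceeds their recent daily average.

    history: one list of report topics per previous day.
    today:   report topics seen so far today.
    """
    days = max(len(history), 1)
    baseline = Counter()
    for day in history:
        baseline.update(day)
    flagged = []
    for topic, count in Counter(today).items():
        avg = baseline[topic] / days
        # Floor the baseline at one report per day so rare or new topics need a real spike.
        if count >= min_count and count >= ratio * max(avg, 1.0):
            flagged.append(topic)
    return sorted(flagged)

past_days = [["login"] * 4 + ["billing"] for _ in range(3)]
today = ["login"] * 5 + ["billing"] * 6 + ["export"] * 2
print(emerging_issues(past_days, today))  # ['billing']
```

"login" is busier in absolute terms but close to its usual volume, while "billing" jumped sixfold, which is the kind of signal a human lead would notice first.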

10. Creativity

Humans: Rethink explanations, invent analogies, write delightful responses.

AI Today:

- Can generate text or images, but the output often lacks originality or purpose.

- Over-optimizes for probability, not creativity.

Still Missing:

- Doesn’t create new support workflows, metaphors, or interface tweaks.

- No instinct for “what might surprise and delight.”

What’s Next:

- AI that invents alternate explanations, KB formats, or onboarding flows based on user context.

- Writing support content that doesn’t feel templated, but uniquely helpful.

11. Emotions & Empathy

Humans: Detect and respond to emotions—anger, anxiety, relief.

AI Today:

- Sentiment analysis and emotion classifiers are available.

- Empathy is scripted or templated.

Still Missing:

- Doesn’t remember how someone felt last time.

- Mirrors tone on the surface without aligning with how the customer actually feels.

What’s Next:

- Agents that build emotional continuity: “Last time this happened, you were frustrated. Let’s fix it quickly today.”

- Genuine, layered empathy—across time, not just in one message.

12. Social Skills

Humans: Read timing, team dynamics, and social nuance.

AI Today:

- Has turn-taking models, but no real group awareness.

- Limited escalation sensitivity.

Still Missing:

- Can’t detect passive aggression, power distance, or indirect escalation requests.

- Doesn’t understand team roles in a conversation (e.g., boss vs new hire).

What’s Next:

- AI that adjusts tone and behavior depending on who it’s speaking with and when.

- For example, toning down assertiveness when a VP joins a group thread.

13. Movement & Control

Humans: Take over screens, fix bugs live, or guide clicks intuitively.

AI Today:

- Can suggest steps, and some copilots can control the UI within strict boundaries.

Still Missing:

- No fluid, safe control of real products in unpredictable environments.

- Most “control” is hard-coded or limited to test environments.

What’s Next:

- Agents that interact with actual UI dynamically—seeing what the user sees, and acting responsibly within that context.

- Example: Fixing settings or filling forms for the user while narrating actions.

14. Imagination & Simulation

Humans: Imagine consequences, simulate what-if scenarios.

AI Today:

- Can generate plausible continuations in narrow contexts (e.g., gaming).

Still Missing:

- Doesn’t simulate real-world edge cases (“What if user tries X after Y fails?”).

- Limited capacity to evaluate outcomes before suggesting actions.

What’s Next:

- Predictive agents that simulate user paths and avoid pitfalls.

- For example, warning users: “This setting will affect your billing tier—do you want to continue?”
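One simple form of simulation is a dry run: compute the consequence of a change before applying it. The Python sketch below previews whether adding seats would move an account into a different billing tier. The tier names and seat limits are hypothetical.

```python
from typing import Optional

# Hypothetical plans as (maximum seats, tier name), in ascending order.
TIERS = [(5, "Starter"), (25, "Growth"), (float("inf"), "Enterprise")]

def tier_for(seats: int) -> str:
    for limit, name in TIERS:
        if seats <= limit:
            return name
    raise ValueError("seat count exceeds every tier")

def preview_change(current_seats: int, added_seats: int) -> Optional[str]:
    """Simulate the change without applying it; return a warning if the tier would change."""
    before = tier_for(current_seats)
    after = tier_for(current_seats + added_seats)
    if before != after:
        return f"This change will move you from {before} to {after}. Do you want to continue?"
    return None

print(preview_change(4, 1))  # None
print(preview_change(4, 2))  # This change will move you from Starter to Growth. Do you want to continue?
```

The agent only asks for confirmation when the simulated outcome differs from the current state, which keeps the warning from becoming noise.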

15. Moral Judgment

Humans: Know when to bend the rule for fairness.

AI Today:

- Follows hard-coded policies. No sense of ethics or exception logic.

Still Missing:

- Doesn’t weigh ethics, culture, or situational fairness.

- Can’t explain decisions with moral reasoning.

What’s Next:

- Context-aware agents that apply fairness with flexibility—“This user’s outage lasted 4 hours; even though policy says 2, let’s offer credit.”

- Moral alignment tuned to brand, region, and use case.

16. Self-Awareness

Humans: Know when they’re unsure, admit it, and ask for help or double-check before answering.

AI Today:

- Can output confidence scores behind the scenes.

- Some systems trigger fallback flows when confidence is low.

Still Missing:

- No actual awareness of its own knowledge boundaries—it often sounds confident even when wrong.

- Doesn’t adapt behavior based on stakes (e.g., billing vs. feature queries).

What’s Next:

- AI agents that recognize when they’re unsure and act accordingly.

- Over time, these agents could track what types of queries they struggle with and adjust behavior—becoming more cautious or handing off earlier.
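The stakes-aware behavior can be sketched as a per-category confidence bar that rises after mistakes. This Python example assumes the system already produces a calibrated confidence score (which, as noted above, is not a given for LLMs). The categories, thresholds, and `HandoffPolicy` class are hypothetical.

```python
from collections import defaultdict
from typing import Optional

# Hypothetical minimum confidence per query category: higher stakes, higher bar.
BASE_THRESHOLDS = {"billing": 0.9, "account": 0.8, "feature": 0.6}

class HandoffPolicy:
    """Decide whether to answer or hand off, and track where the agent struggles."""

    def __init__(self, thresholds: Optional[dict[str, float]] = None,
                 penalty: float = 0.05, cap: float = 0.99):
        self.thresholds = dict(thresholds or BASE_THRESHOLDS)  # copy; never mutate the defaults
        self.penalty = penalty  # how much to raise the bar after a wrong answer
        self.cap = cap
        self.errors = defaultdict(int)

    def should_answer(self, category: str, confidence: float) -> bool:
        # Unknown categories are treated as high stakes.
        return confidence >= self.thresholds.get(category, 0.9)

    def record_outcome(self, category: str, was_correct: bool) -> None:
        """After a wrong answer, become more cautious in that category."""
        if not was_correct:
            self.errors[category] += 1
            current = self.thresholds.get(category, 0.9)
            self.thresholds[category] = min(current + self.penalty, self.cap)

policy = HandoffPolicy()
print(policy.should_answer("feature", 0.68))  # True
print(policy.should_answer("billing", 0.68))  # False: billing has a higher bar
policy.record_outcome("feature", was_correct=False)
policy.record_outcome("feature", was_correct=False)
print(policy.should_answer("feature", 0.68))  # False: the bar has risen to about 0.7
```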

Final Thought

As AI grows closer to how humans think, perceive, and act, the opportunity isn’t just to replicate human support—it’s to reimagine it. The more we understand the gaps, the more courageously we can design the future.